SET NOEXEC is my new friend

I had never really played around with the option SET NOEXEC ON.

It turns out this can be a helpful way to validate larger batch scripts before actually making changes, because each batch is compiled but not executed. You can also verify syntax by using "Parse" in SSMS, but parsing doesn't compile. Compilation checks more than your syntax: it also validates the object names the script references.
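As a minimal sketch of the idea, the script below wraps two data-changing batches in SET NOEXEC ON / OFF so they are compiled but never run. The table dbo.Customer and its columns are hypothetical names used only for illustration.

```sql
-- Sketch only: dbo.Customer, IsActive and LastOrderDate are hypothetical names.
SET NOEXEC ON;
GO

-- Compiled but not executed while NOEXEC is ON, so no rows change.
UPDATE dbo.Customer
SET    IsActive = 0
WHERE  LastOrderDate < '20100101';
GO

-- A compilation error in this batch would be reported without the
-- earlier batch having modified anything.
DELETE FROM dbo.Customer
WHERE  IsActive = 0;
GO

-- SET NOEXEC OFF is still honored while NOEXEC is ON; always switch it back.
SET NOEXEC OFF;
GO
```

One caveat to keep in mind: because of deferred name resolution, a reference to a table that doesn't exist at all may not raise an error, so a clean compile is a strong check rather than a guarantee.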

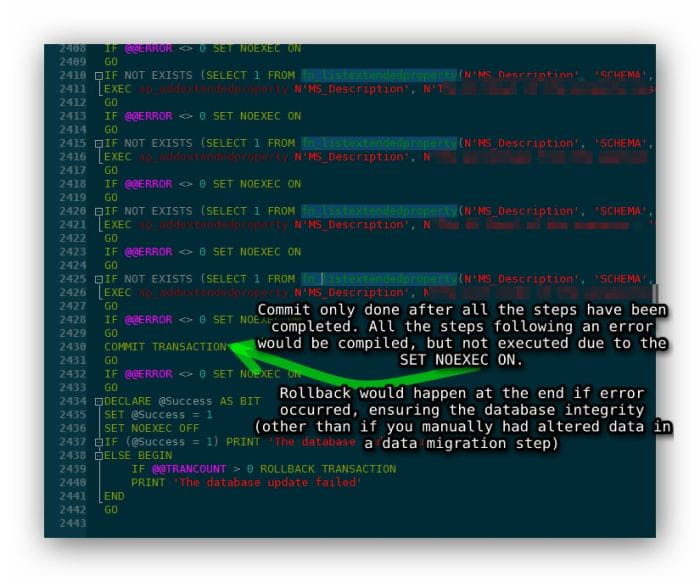

"The execution of statements in SQL Server has two phases: compilation and execution. This setting is useful for having SQL Server validate the syntax and object names in Transact-SQL code when executing. It is also useful for debugging statements that would generally be part of a larger batch of statements." (MSDN #188394)

I previously did this basic verification by running an estimated execution plan, which had the benefit of finding compilation errors as well. However, with large batch jobs it could be problematic and slow, because it had to process and return execution plans for all of the statements, which I didn't need. SET NOEXEC ON is a nice way to validate scripts before running them, without the overhead of estimated execution plans.

Additionally, you can handle transactional rollbacks and prevent cascading problems when running a batch that changes your database by setting NOEXEC ON when an error is encountered. Red Gate SQL Compare does this elegantly:

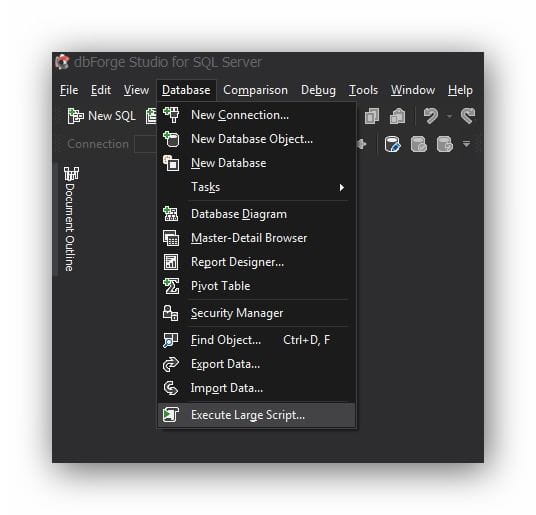

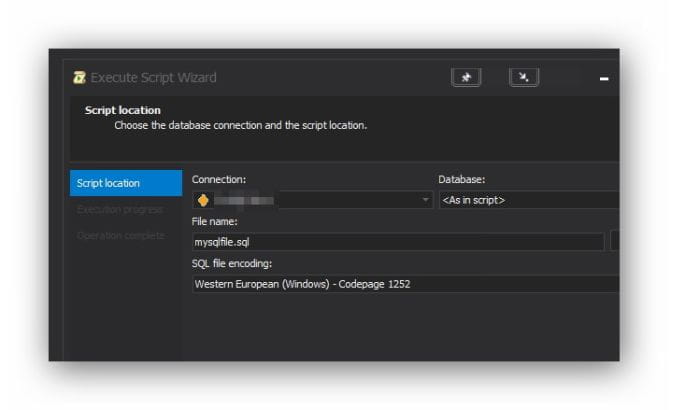

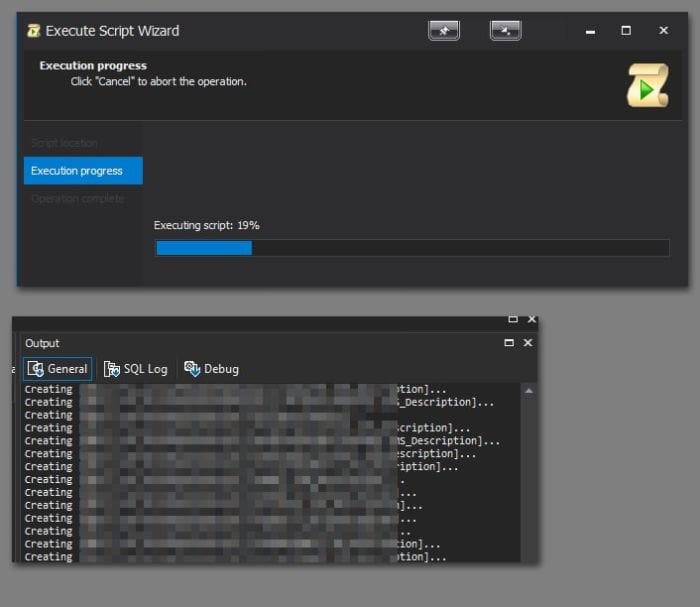
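For readers who can't see the screenshots, here is a rough sketch of the error-handling pattern described above: after each batch, check @@ERROR and switch NOEXEC on so the remaining batches are skipped. This is my approximation of that style of deployment script, not Red Gate's actual output, and dbo.Customer, its columns, and the index name are hypothetical.

```sql
-- Sketch only: dbo.Customer, Region, LastOrderDate and the index name are hypothetical.
SET XACT_ABORT ON;
GO
BEGIN TRANSACTION;
GO

ALTER TABLE dbo.Customer ADD Region nvarchar(50) NULL;
GO
-- If the previous batch failed, stop executing everything that follows.
IF @@ERROR <> 0 SET NOEXEC ON;
GO

CREATE INDEX IX_Customer_LastOrderDate ON dbo.Customer (LastOrderDate);
GO
IF @@ERROR <> 0 SET NOEXEC ON;
GO

COMMIT TRANSACTION;
GO
IF @@ERROR <> 0 SET NOEXEC ON;
GO

-- While NOEXEC is ON the assignment below is compiled but never runs,
-- so @Success stays NULL. SET NOEXEC OFF does run and re-enables execution.
DECLARE @Success bit;
SET @Success = 1;
SET NOEXEC OFF;
IF (@Success = 1)
    PRINT 'The database update succeeded';
ELSE
BEGIN
    IF @@TRANCOUNT > 0 ROLLBACK TRANSACTION;
    PRINT 'The database update failed';
END
GO
```

The point of the design is that a failure early in the script prevents later batches from running against a half-changed database, and the final batch can still report the outcome and roll back any open transaction.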

You can see the progress and the update in the output log, but the entire script isn't slowing down your GUI. In fact, you can just putter along and keep coding.

Other options to accomplish the same thing include executing via SQLCMD or PowerShell, or breaking things up into smaller files. This just happened to be a pretty convenient option!

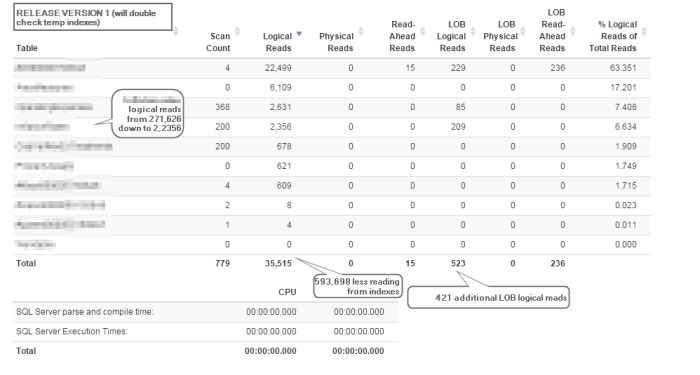

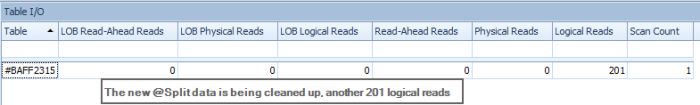

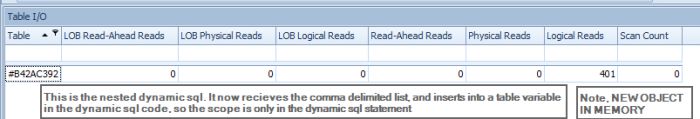

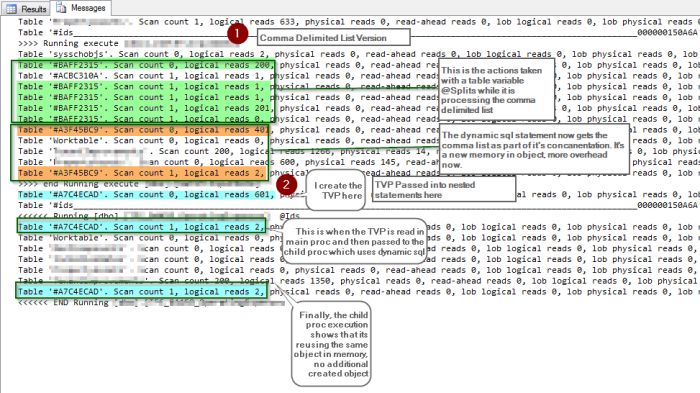

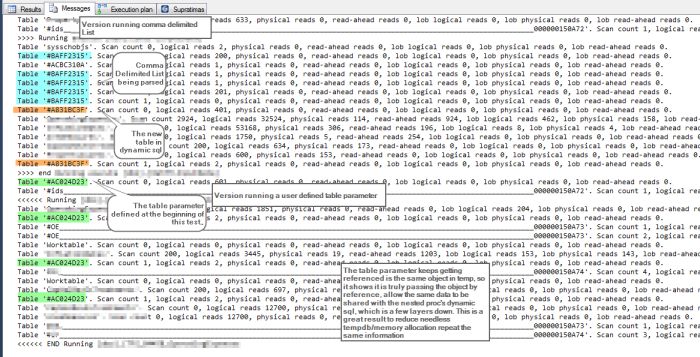

The footprint is reduced when dealing with IO from the child statement, because it keeps pointing to the same in-memory object. I also validated this further by examining a more complex version of the same query, which compares the comma-delimited list against executing a nested stored procedure that in turn has dynamic SQL needing the table parameter passed to it. The results show that it keeps pointing to the same temp object!
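To make the nested case concrete, here is a minimal sketch of a table-valued parameter passed through an outer procedure, into an inner procedure, and on into dynamic SQL via sp_executesql. It assumes the "table parameter" mentioned above is a table-valued parameter; the type, procedure, table, and column names are all hypothetical, and this is not the exact query I tested.

```sql
-- Sketch only: every object name below is hypothetical.
CREATE TYPE dbo.IdList AS TABLE (Id int NOT NULL PRIMARY KEY);
GO

-- Inner procedure: forwards the table-valued parameter into dynamic SQL.
CREATE PROCEDURE dbo.GetCustomersByIds
    @Ids dbo.IdList READONLY
AS
BEGIN
    SET NOCOUNT ON;
    DECLARE @sql nvarchar(max) = N'
        SELECT c.CustomerId, c.Name
        FROM   dbo.Customer AS c
        JOIN   @Ids AS i ON i.Id = c.CustomerId;';
    -- Table-valued parameters must be declared READONLY in the parameter list.
    EXEC sys.sp_executesql @sql, N'@Ids dbo.IdList READONLY', @Ids = @Ids;
END
GO

-- Outer procedure: simply hands the same parameter to the nested procedure.
CREATE PROCEDURE dbo.GetCustomersOuter
    @Ids dbo.IdList READONLY
AS
BEGIN
    EXEC dbo.GetCustomersByIds @Ids = @Ids;
END
GO

-- Usage: fill the table variable once and pass it down the chain.
DECLARE @Ids dbo.IdList;
INSERT INTO @Ids (Id) VALUES (1), (2), (3);
EXEC dbo.GetCustomersOuter @Ids = @Ids;
GO
```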

Another option I found interesting was the support for loading a database hosted on a file share. Brent Ozar has an article on